AI system classification

Saidot enables you to classify your AI systems in the following ways:

Based on overall risk level, with manual selection

Based on the risk level inherited from components

Based on the EU AI Act, using a specific automation

Based on the level of importance

In general, AI system classification helps the organisation to:

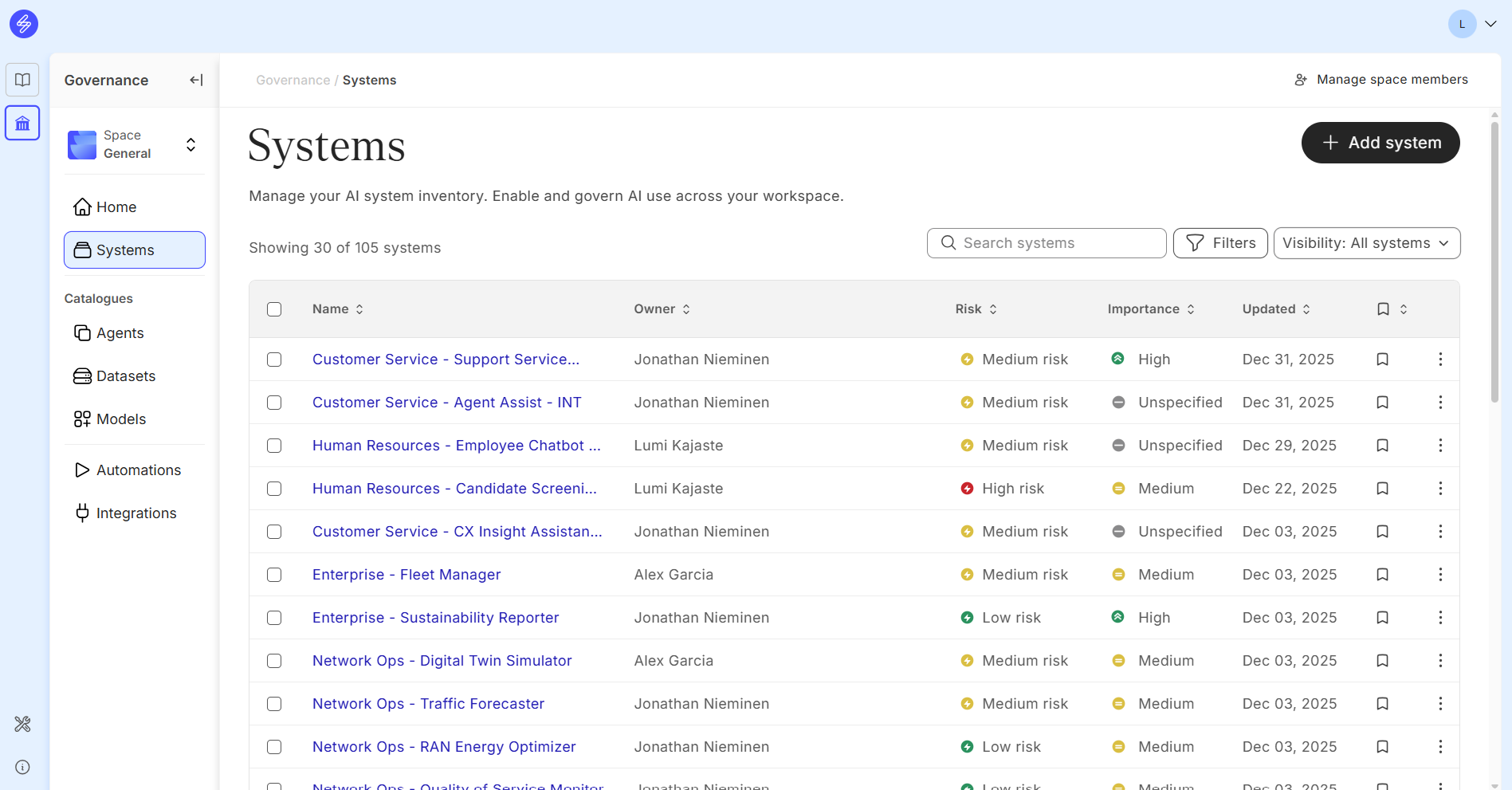

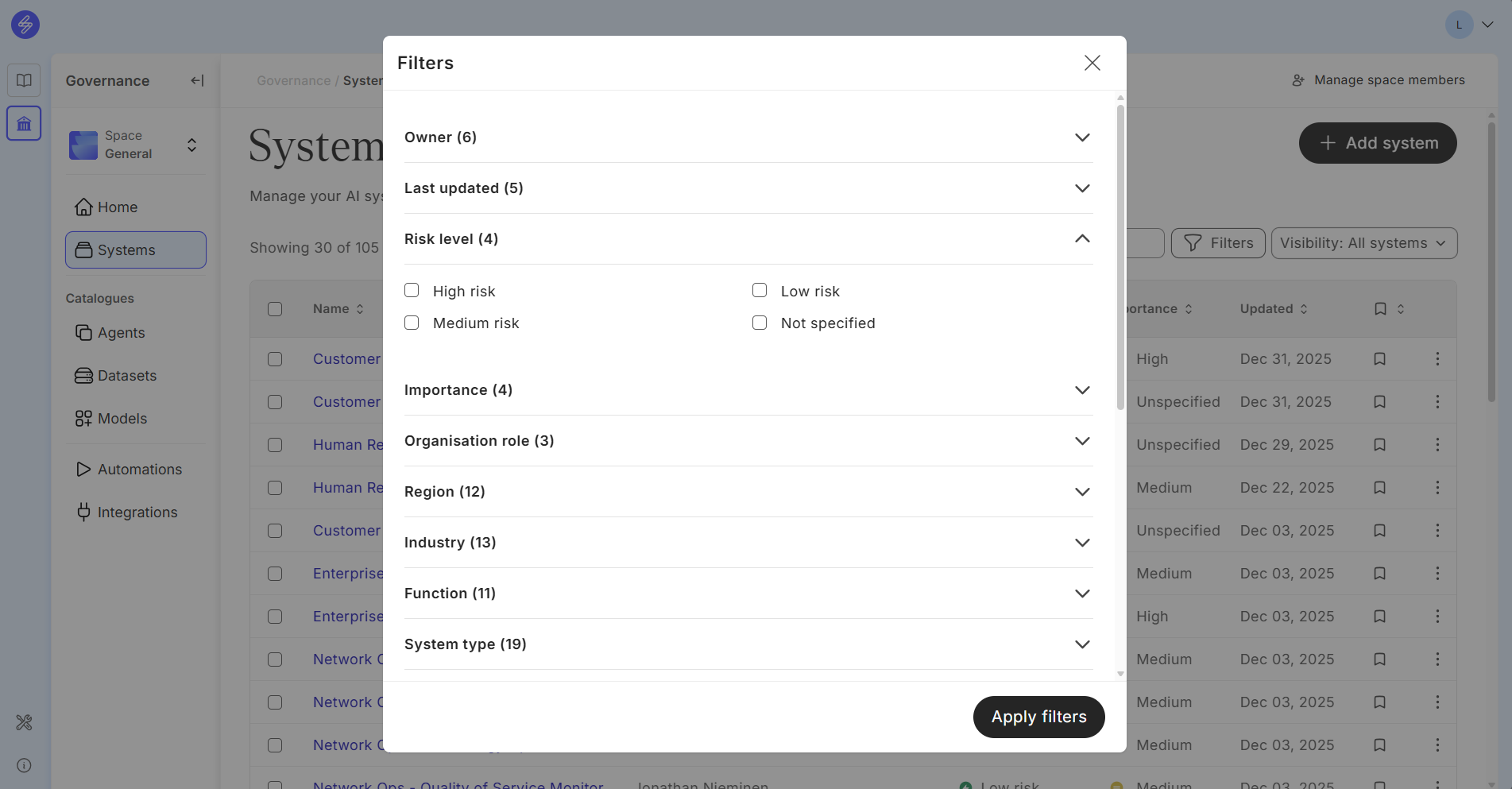

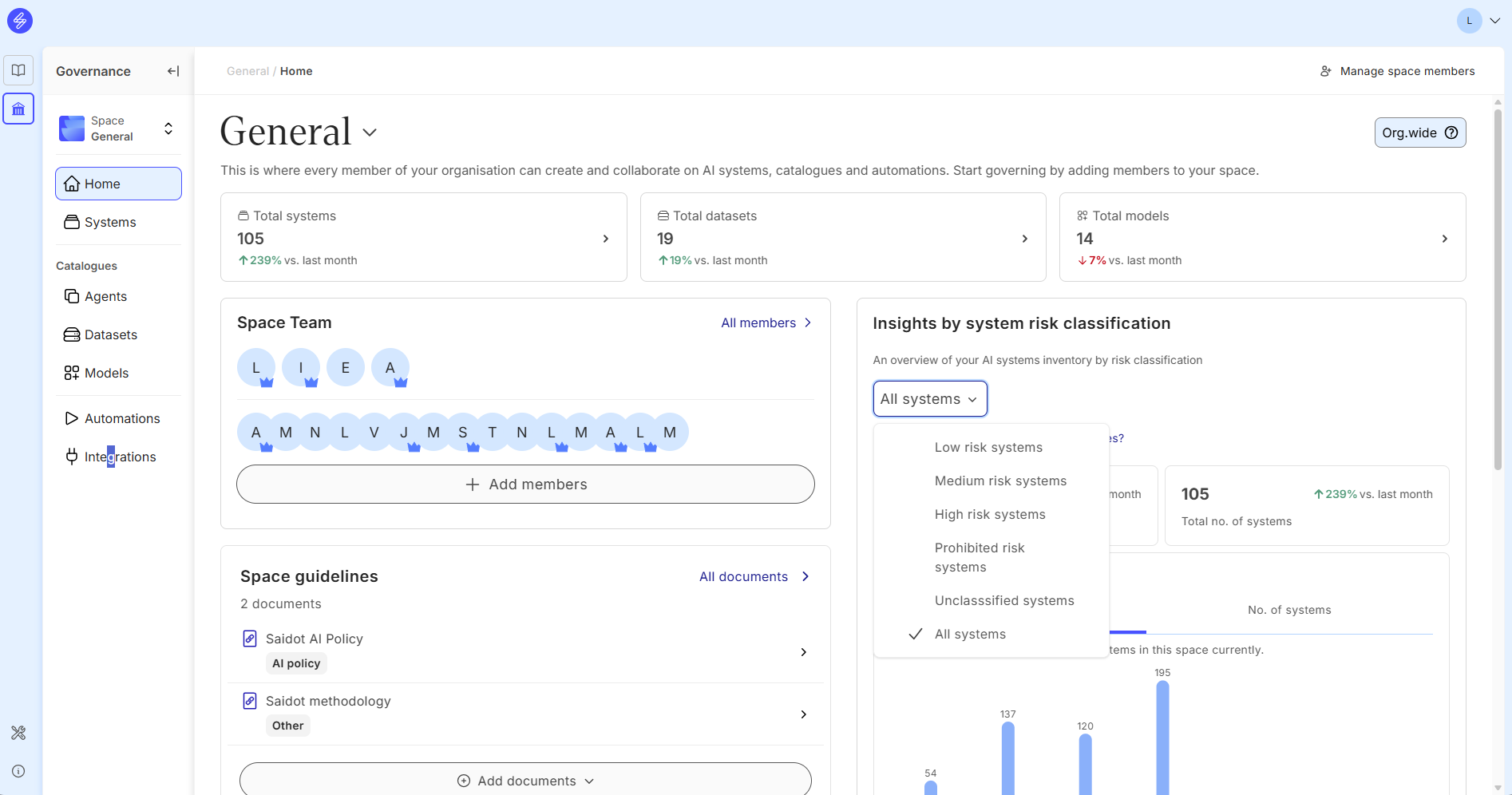

Analyse, filter and search AI systems from the Inventory

Focus governance effort on the AI systems with a higher risk level (right-size governance)

Review and audit AI system-specific governance

Define documentation standards and governance tasks based on risk level

Balance risk level with expected benefits and importance to the business

Manage the AI system inventory and portfolio

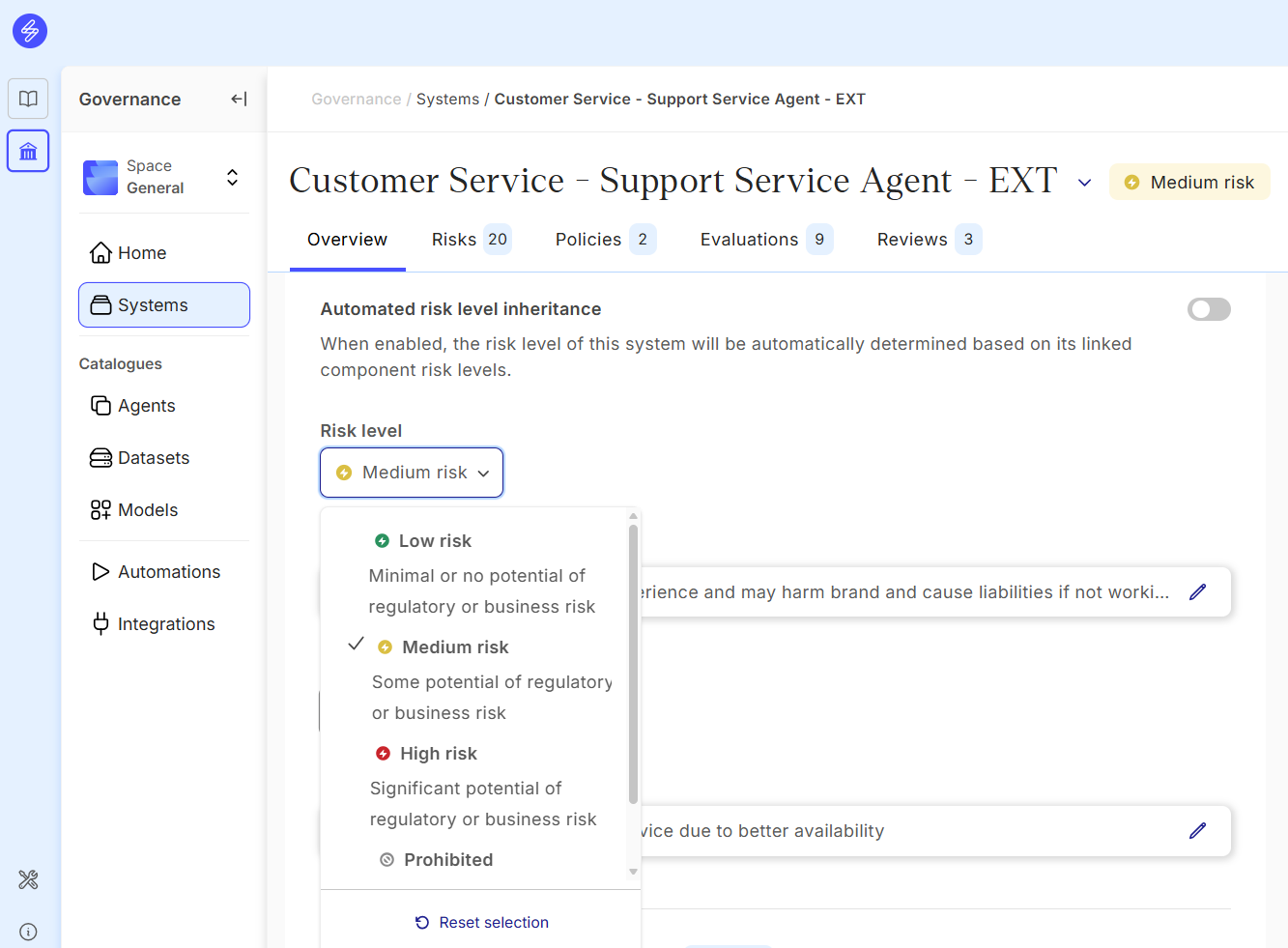

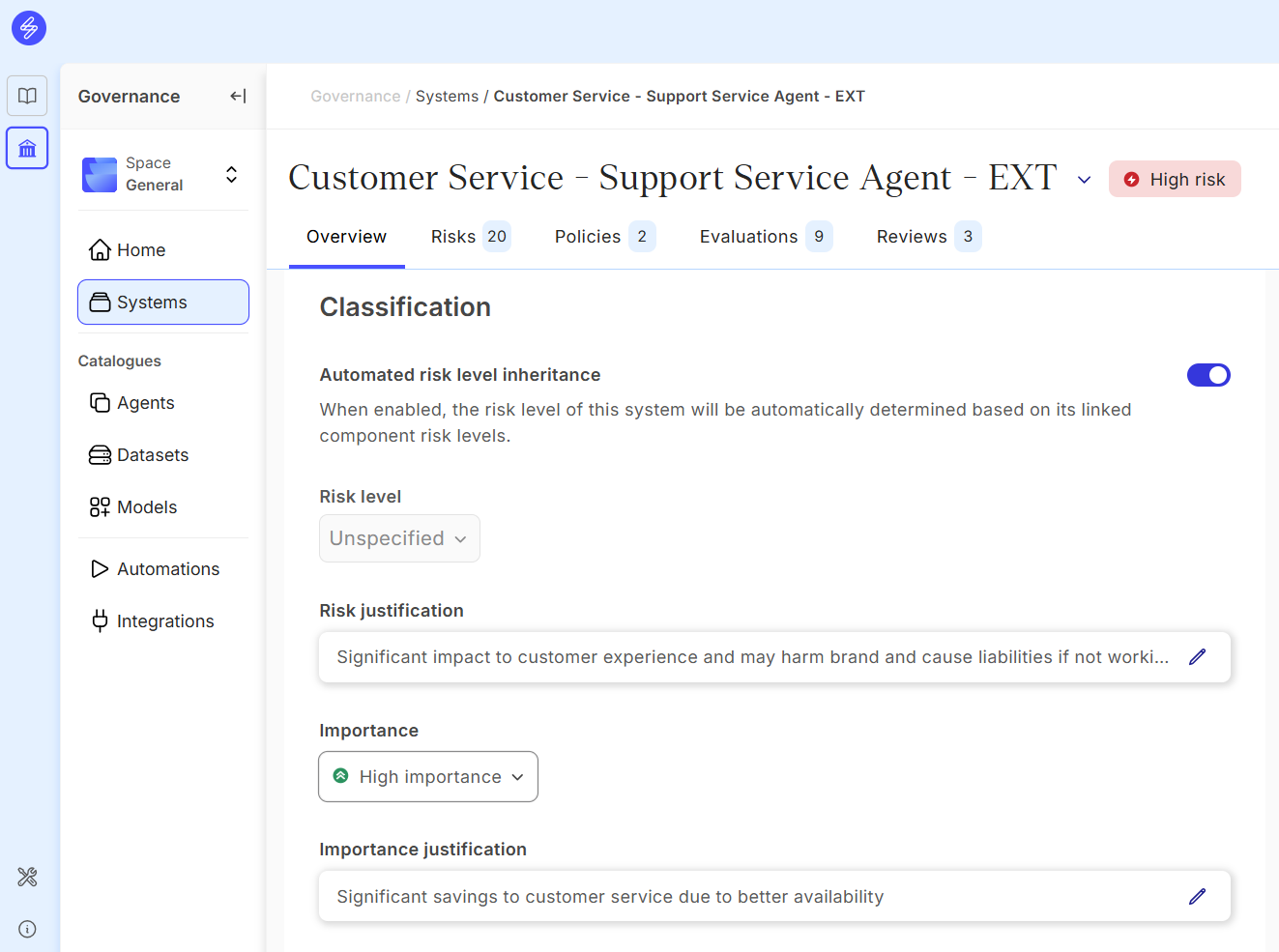

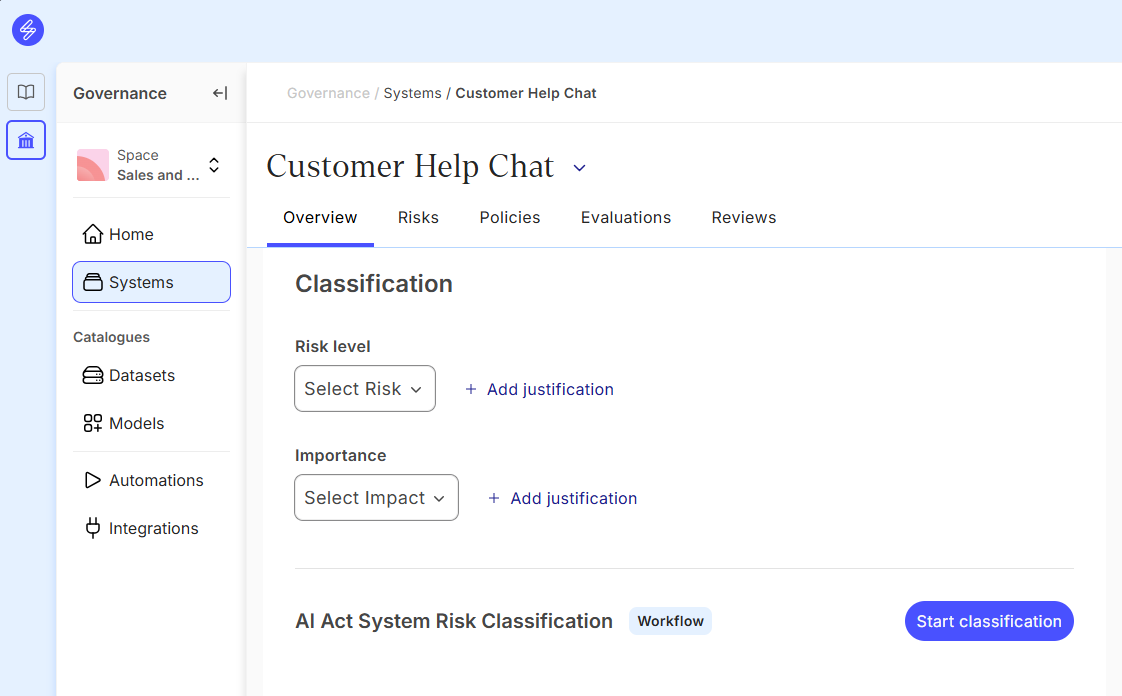

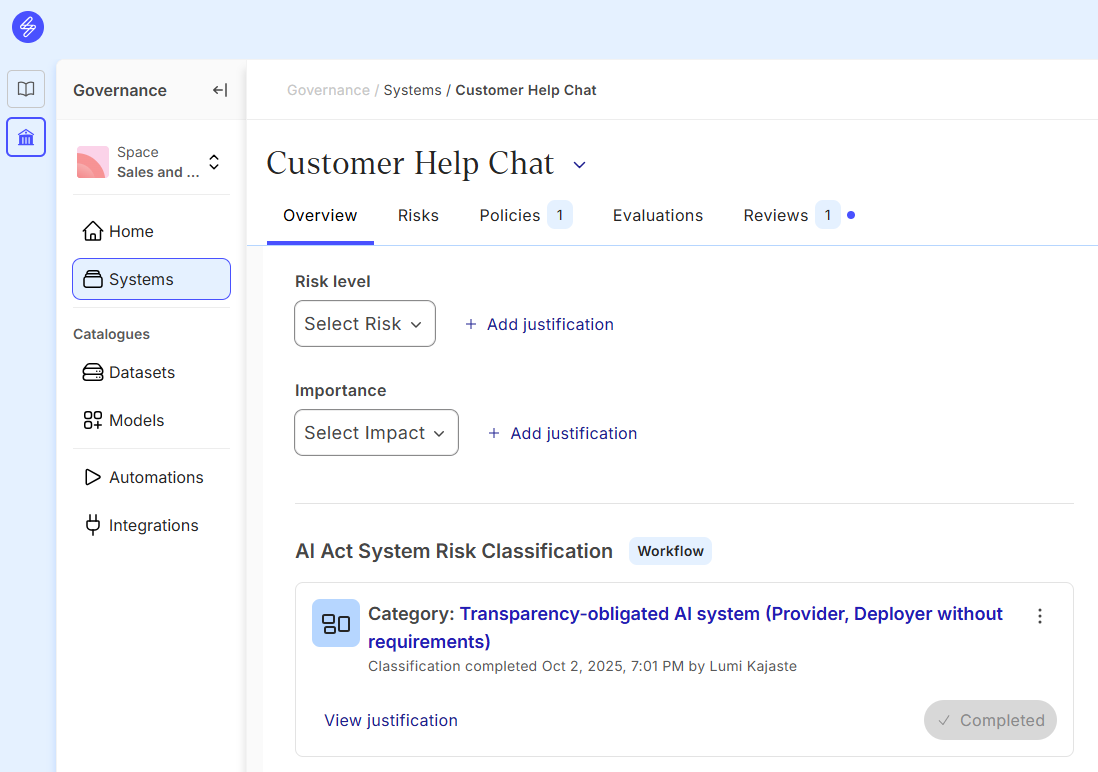

Defining the risk level manually

The overall risk level of the AI system can be defined manually by selecting one of the following options:

Low risk (Minimal or no potential regulatory or business risk)

Medium risk (Some potential regulatory or business risk)

High risk (Significant potential regulatory or business risk)

Prohibited (The AI system is prohibited due to regulatory or business risk)

We recommend analysing and defining company-specific criteria for general AI system risk classification. The criteria can take into account a wide variety of security, privacy, copyright, business, contractual, cost or regulatory factors that affect the overall risk classification. A justification can be given for the selected risk level.

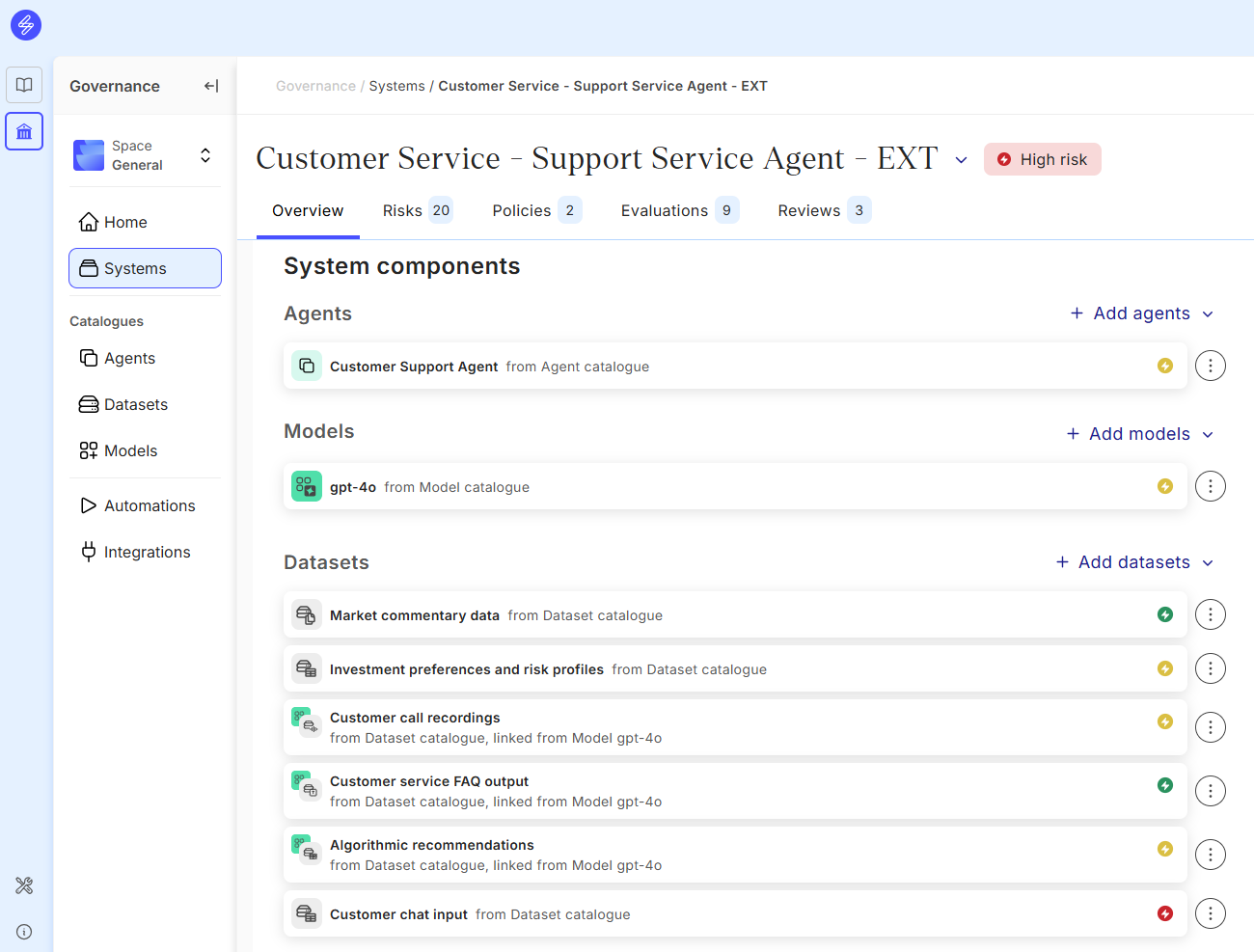

Inheriting the risk level automatically

Members can enable automatic risk level inheritance from the system components. When this automation is turned on, the AI system's risk level is set to the highest risk classification among its components.

If the components of an AI system have different risk classifications, the AI system's risk classification is determined by the highest value. When the risk level of a component changes, the AI system's risk level changes automatically. This way, the source of the risk level can be identified and managed. The AI system owner can, for example, remove the component that causes the higher risk level or change the component to lower its risk level.
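The inheritance rule described above can be illustrated with a minimal Python sketch. This is not Saidot's implementation or API. The names `RiskLevel` and `inherited_risk_level` are hypothetical, and the sketch assumes that components use the same scale as AI systems, ordered Low < Medium < High < Prohibited.

```python
from enum import IntEnum
from typing import Iterable, Optional


class RiskLevel(IntEnum):
    # Assumed ordering: a larger value means a higher risk.
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    PROHIBITED = 4


def inherited_risk_level(
    component_levels: Iterable[Optional[RiskLevel]],
) -> Optional[RiskLevel]:
    """Return the highest risk level among the components.

    Components without a risk level (None) are ignored. If no component
    has a risk level, the result is None (unspecified).
    """
    specified = [level for level in component_levels if level is not None]
    return max(specified) if specified else None


# Hypothetical AI system with one model, one agent and one dataset.
components = {
    "model": RiskLevel.MEDIUM,
    "agent": RiskLevel.HIGH,
    "dataset": RiskLevel.LOW,
}
system_level = inherited_risk_level(components.values())
print(system_level.name)  # HIGH

# Lowering the risk of the agent lowers the inherited level.
components["agent"] = RiskLevel.LOW
print(inherited_risk_level(components.values()).name)  # MEDIUM
```

Because the result is always recomputed from the components, the component that drives the AI system's risk level is easy to find: it is the one holding the maximum value.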

Adjusting the risk level of the components

All the components in the Model, Agent and Data Catalogues can be classified according to their risk level. We also recommend analysing the factors that affect the risk level of each component type and creating a guideline for users, so that classification is done consistently.

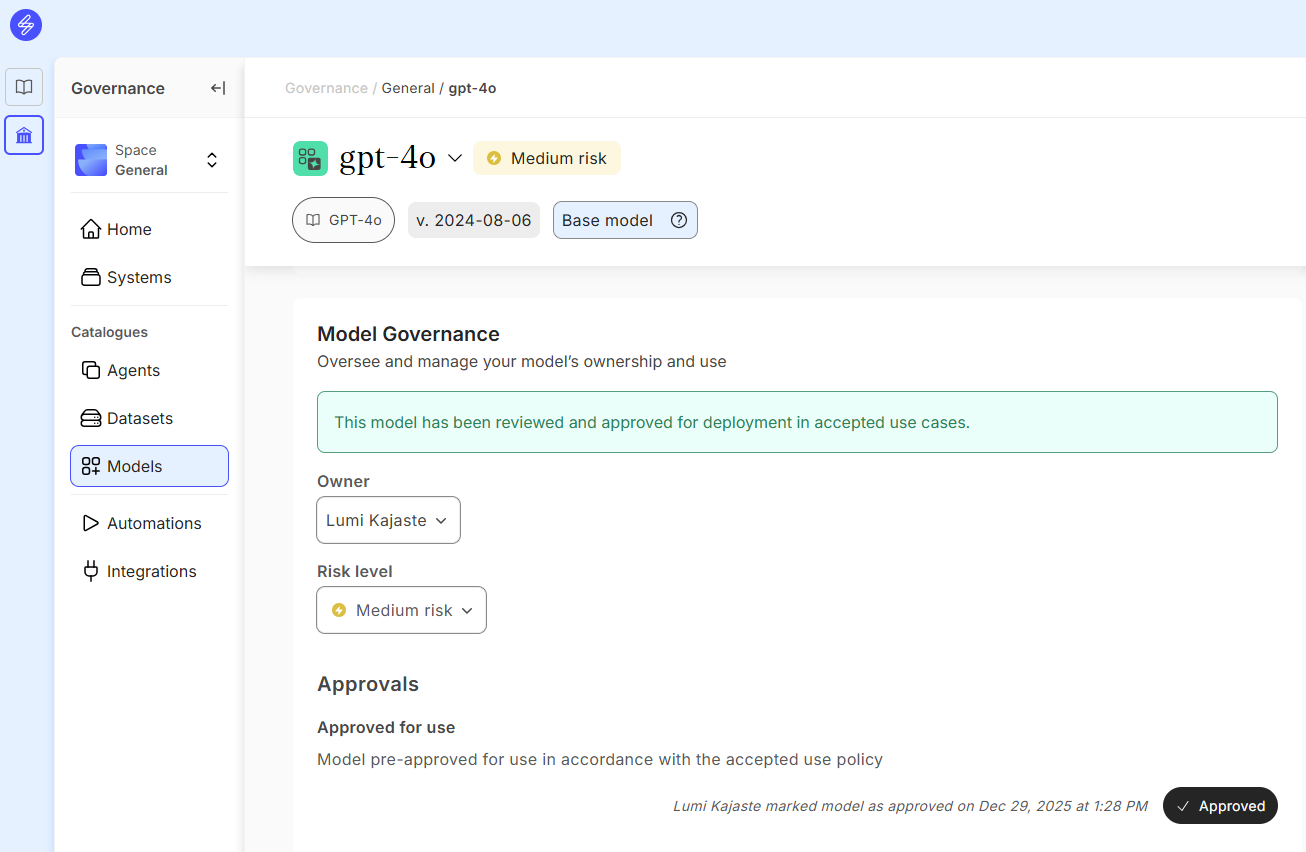

Model risk level

Model risk level can be adjusted manually in the model card.

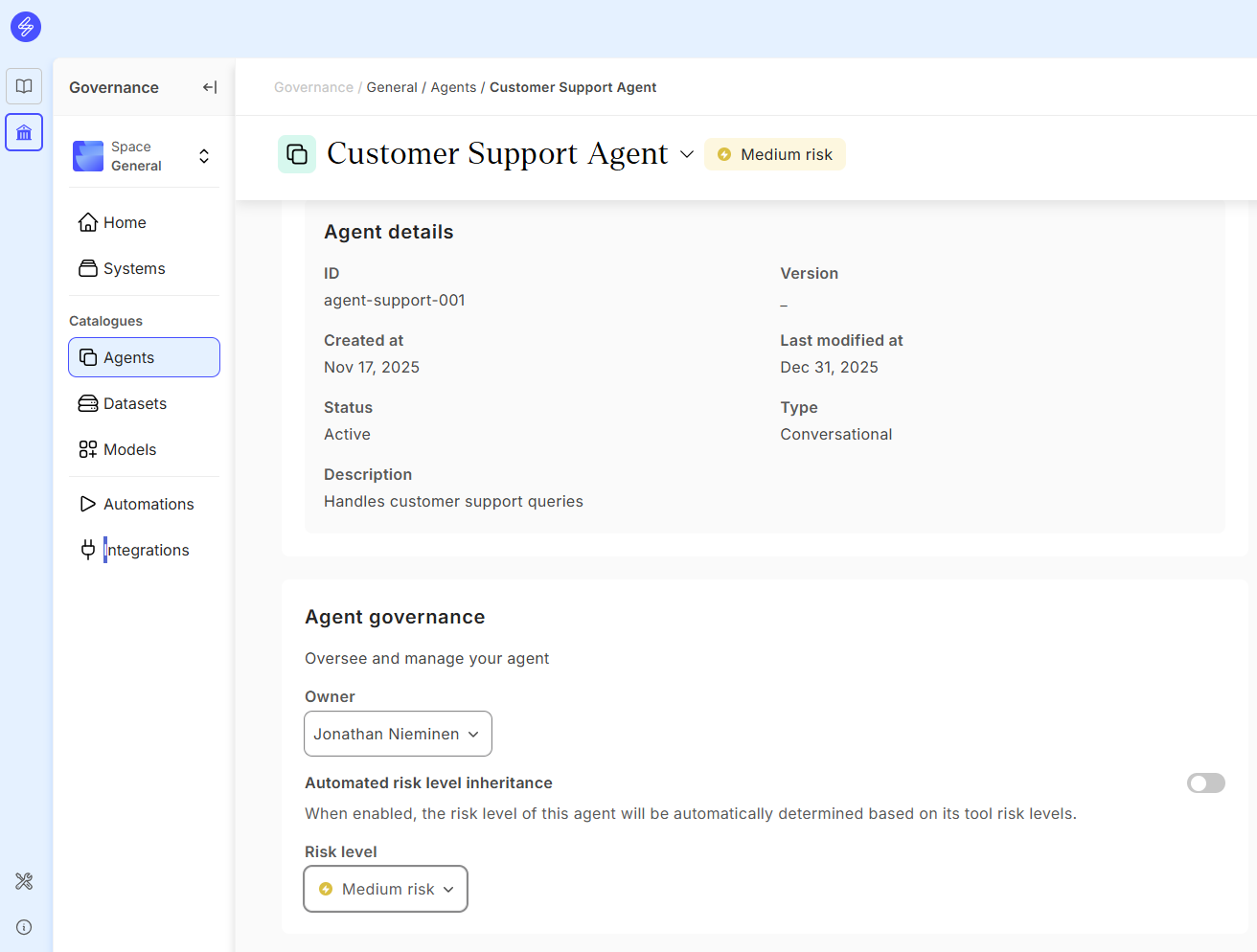

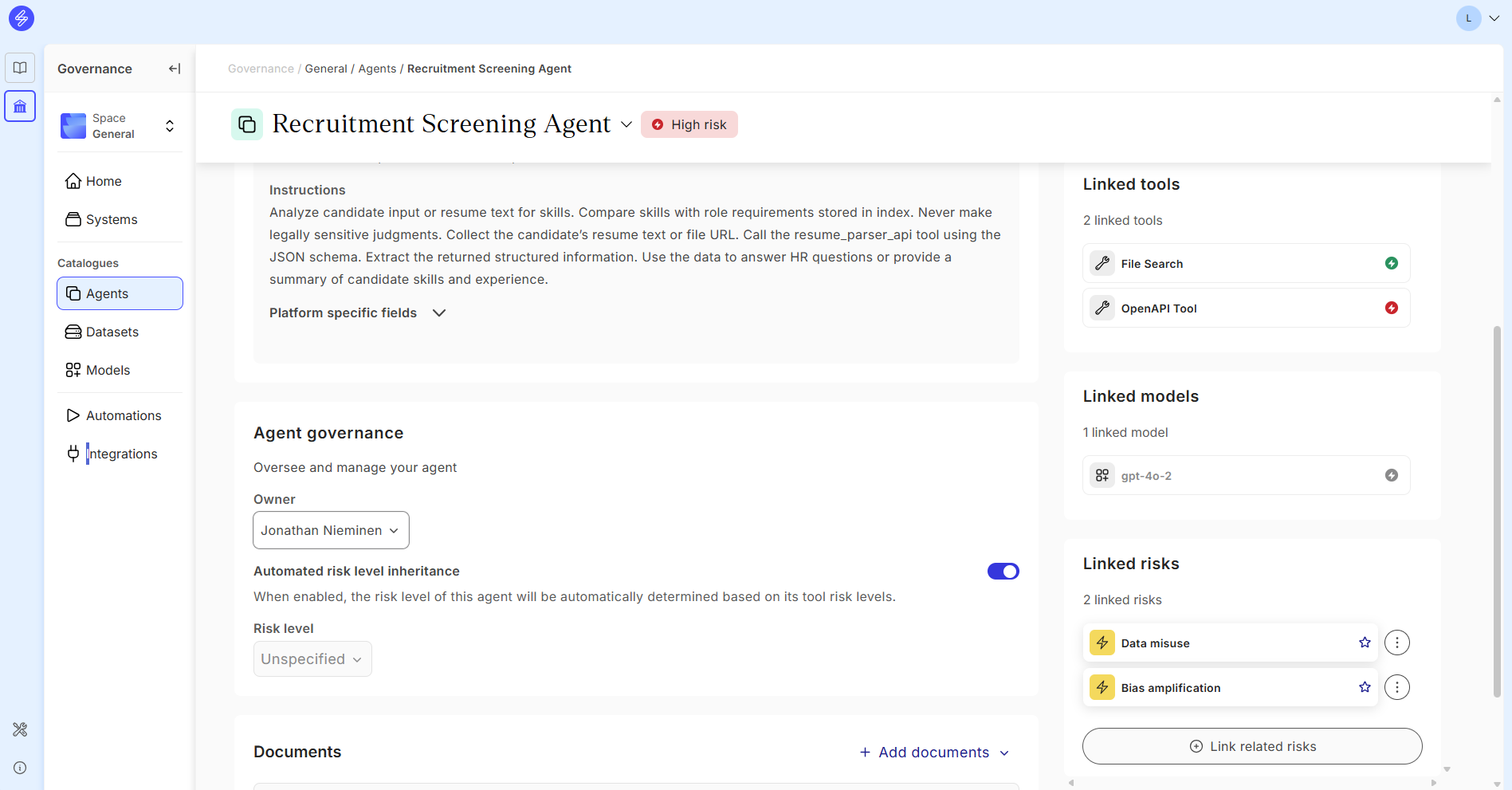

Agent risk level

Agent risk level can be adjusted manually, or automatically based on the Agent's tool access and the Tool risk levels. The risk level inheritance automation can be turned on or off in the Agent card. Adjusting the Agent risk level manually is needed, for example, when no Tools are linked to the Agent or when the Agent risk level differs from the one inherited from the Tools.

When automated risk level inheritance is turned on using the toggle, the Agent risk level is determined automatically based on its Tool risk levels. If a Tool risk level changes, the Agent risk level changes automatically. If the Tools have no risk levels specified, the Agent risk level will be unspecified.

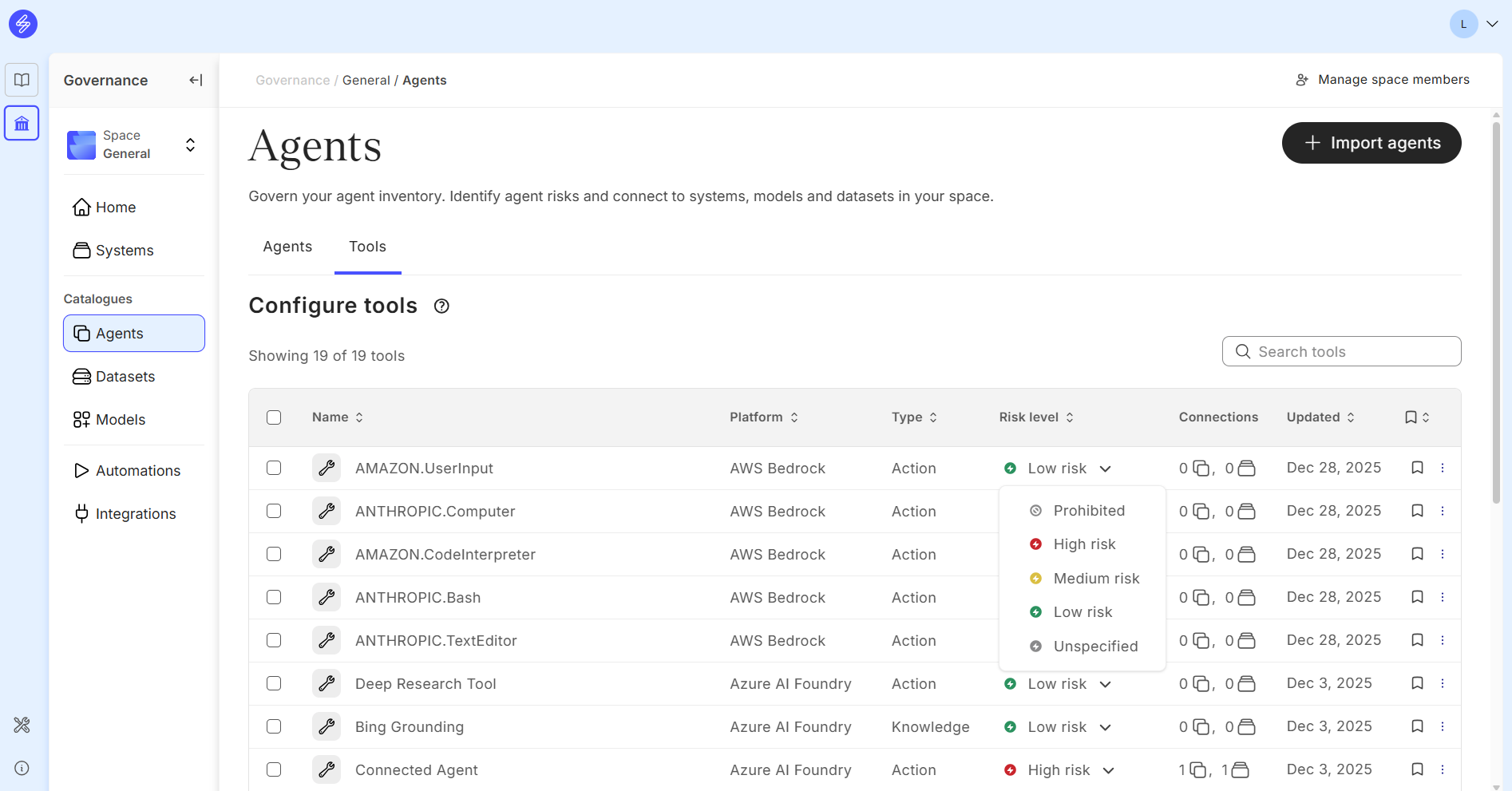
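The toggle behaviour can be sketched in Python as follows. This is an illustrative model only, not Saidot's API: the classes `Tool` and `Agent` and the field `inherit_from_tools` are hypothetical. It also assumes that the inherited level is the highest Tool risk level, mirroring the rule for AI systems.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List, Optional


class RiskLevel(IntEnum):
    # Assumed ordering: a larger value means a higher risk.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Tool:
    name: str
    risk_level: Optional[RiskLevel] = None  # None means unspecified


@dataclass
class Agent:
    name: str
    tools: List[Tool] = field(default_factory=list)
    inherit_from_tools: bool = False  # stands in for the toggle in the Agent card
    manual_risk_level: Optional[RiskLevel] = None

    @property
    def risk_level(self) -> Optional[RiskLevel]:
        # Toggle off: the manually selected level applies.
        if not self.inherit_from_tools:
            return self.manual_risk_level
        # Toggle on: take the highest specified Tool level (assumption),
        # or None (unspecified) when no Tool has a level.
        levels = [t.risk_level for t in self.tools if t.risk_level is not None]
        return max(levels) if levels else None


# Hypothetical example data.
search = Tool("web_search", RiskLevel.LOW)
payments = Tool("payments_api", RiskLevel.HIGH)
agent = Agent("assistant", tools=[search, payments], inherit_from_tools=True)
print(agent.risk_level.name)  # HIGH

# Changing a Tool risk level changes the Agent risk level.
payments.risk_level = RiskLevel.MEDIUM
print(agent.risk_level.name)  # MEDIUM
```

Computing the level on read, rather than storing it, keeps the Agent level in step with its Tools without a separate update step.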

The tool risk levels can be adjusted directly in the Tools view.

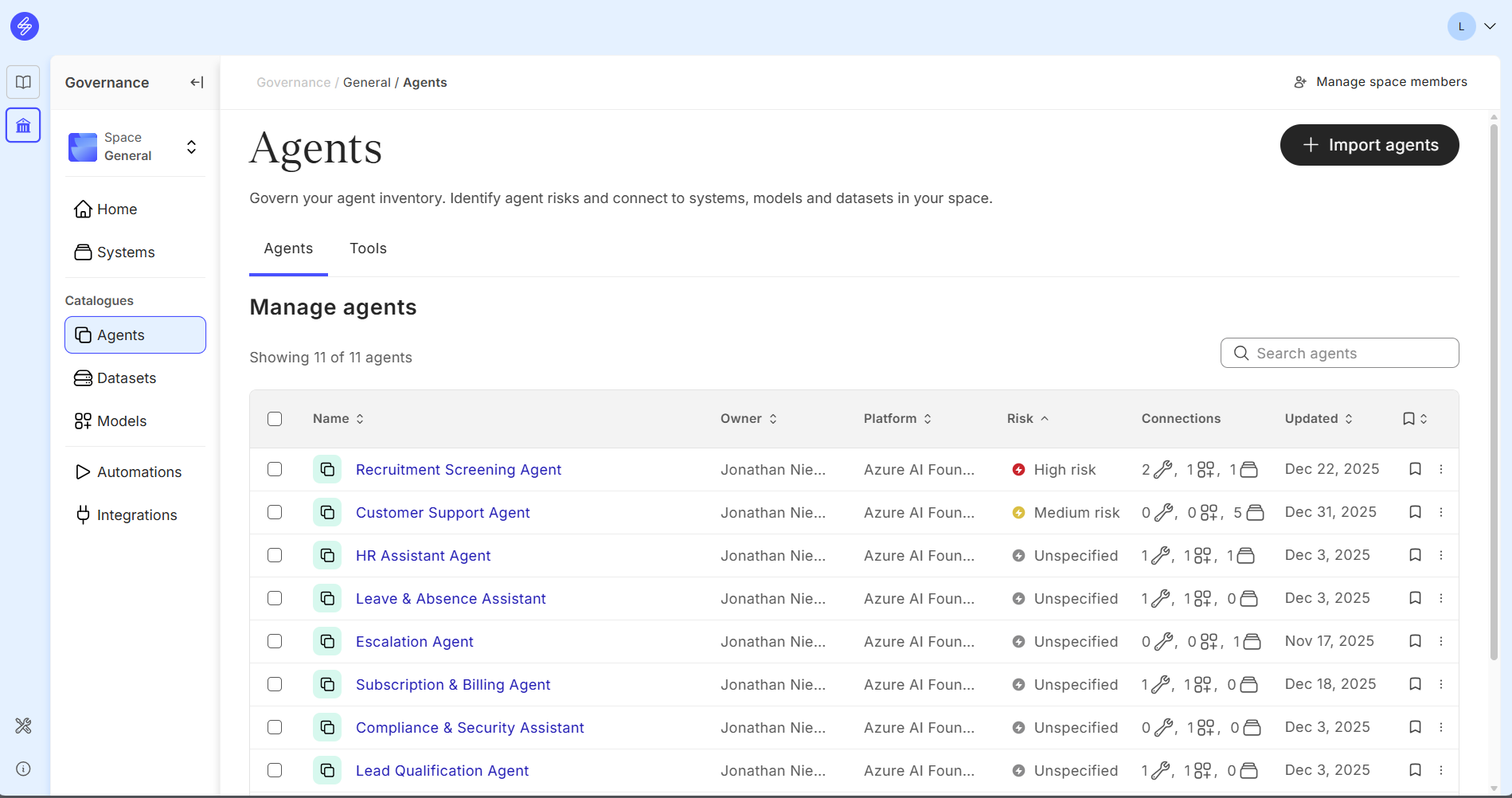

Agent risk levels can be analysed and filtered in the Agent Catalogue main view.

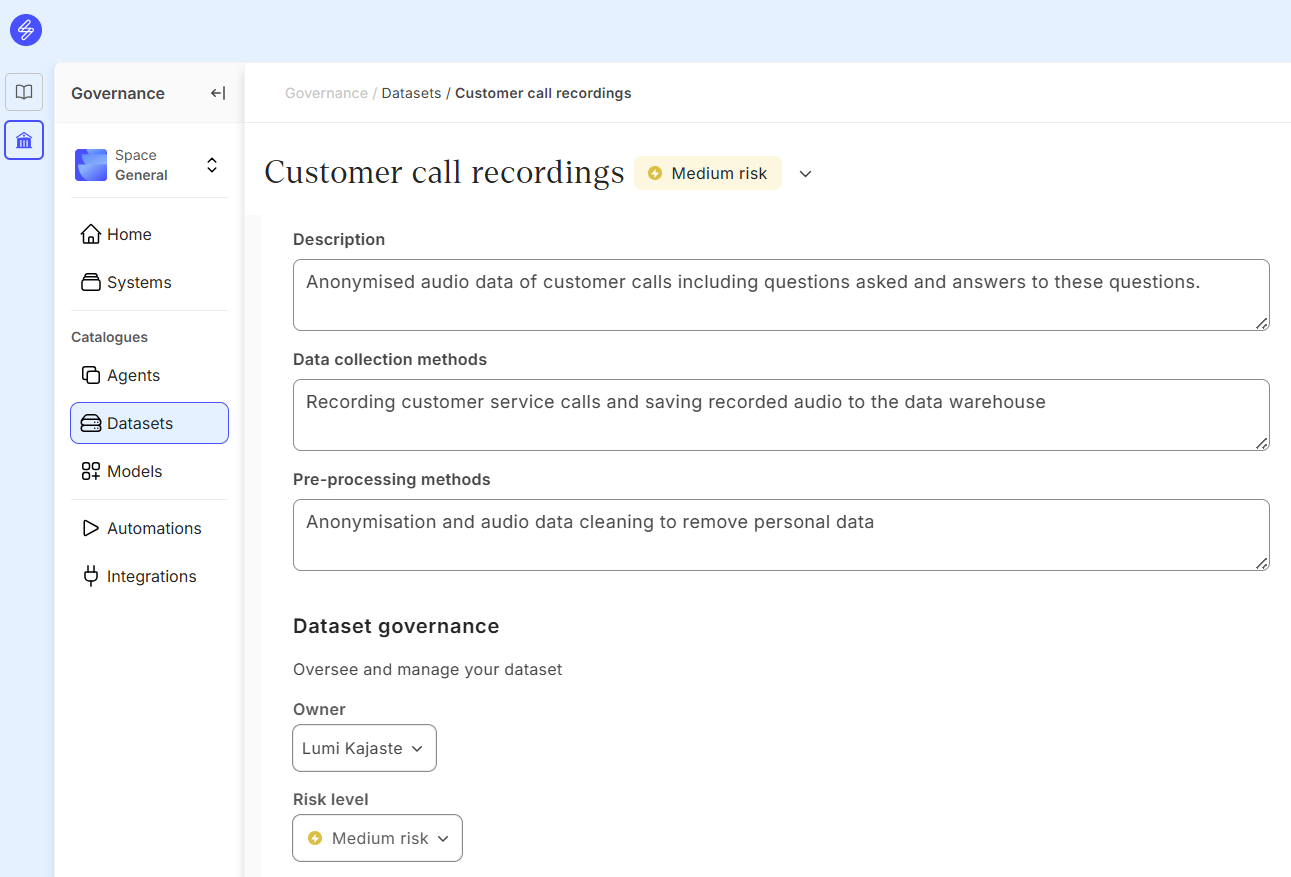

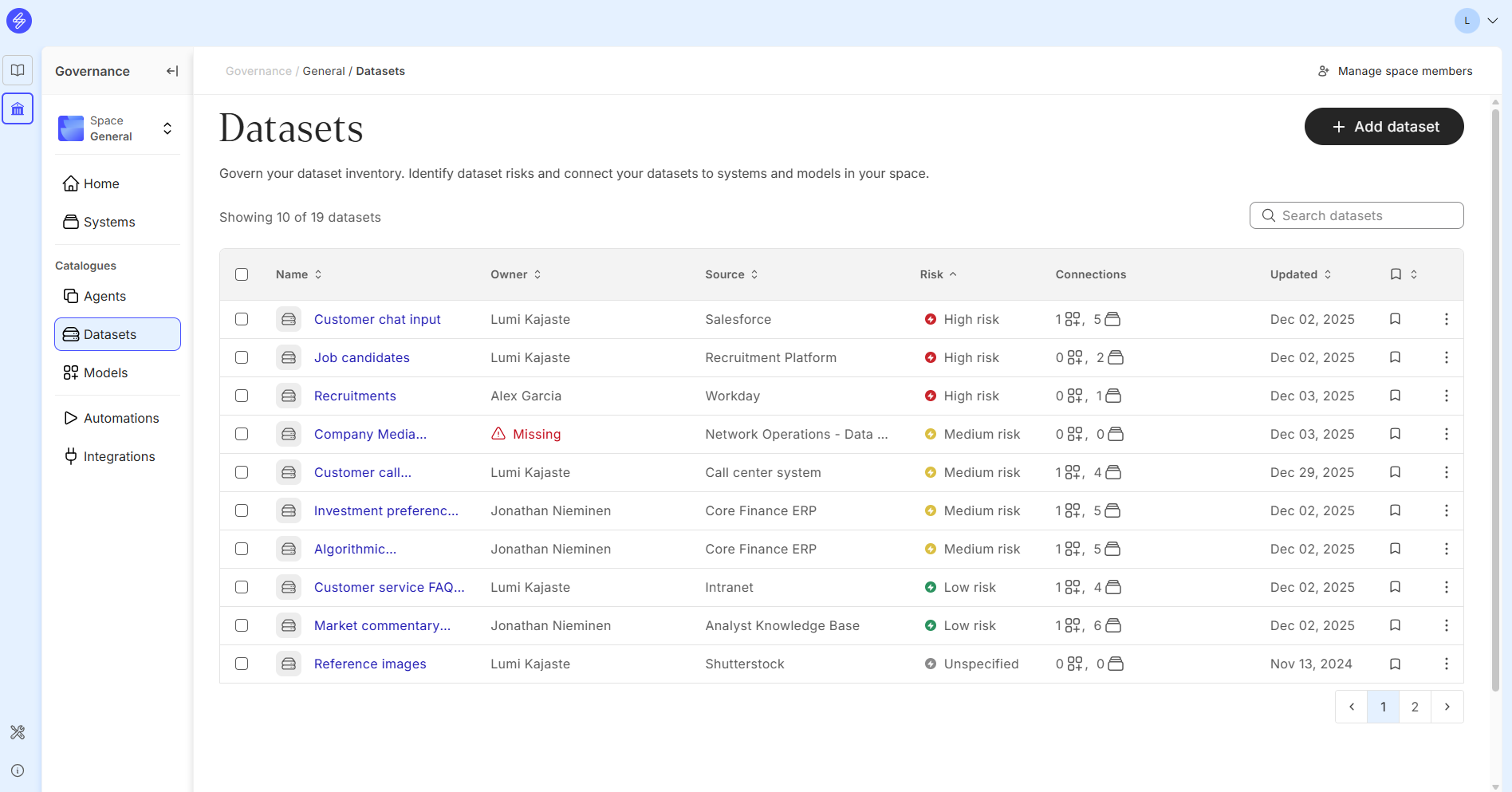

Dataset risk level

Dataset risk levels can be adjusted in the Dataset card Governance section.

Dataset risk levels can be analysed in the Dataset Catalogue main view.

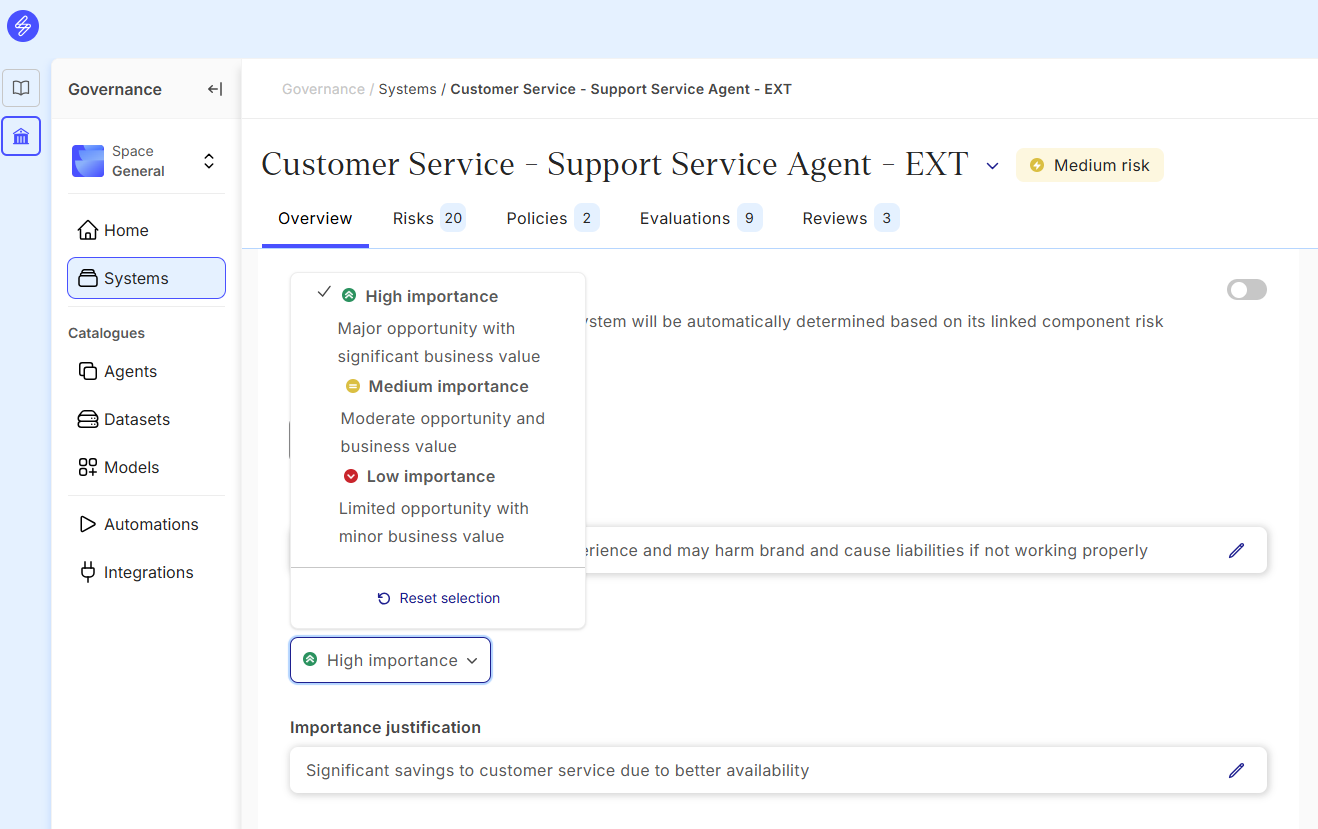

Defining the level of importance manually

The level of importance can be set manually for all systems. The company can define its own criteria for evaluating the level of importance, including monetary and non-monetary benefits to the business, customers and employees. Setting the level of importance helps to manage the AI system portfolio and to focus on the AI systems with the highest importance relative to their risk level.

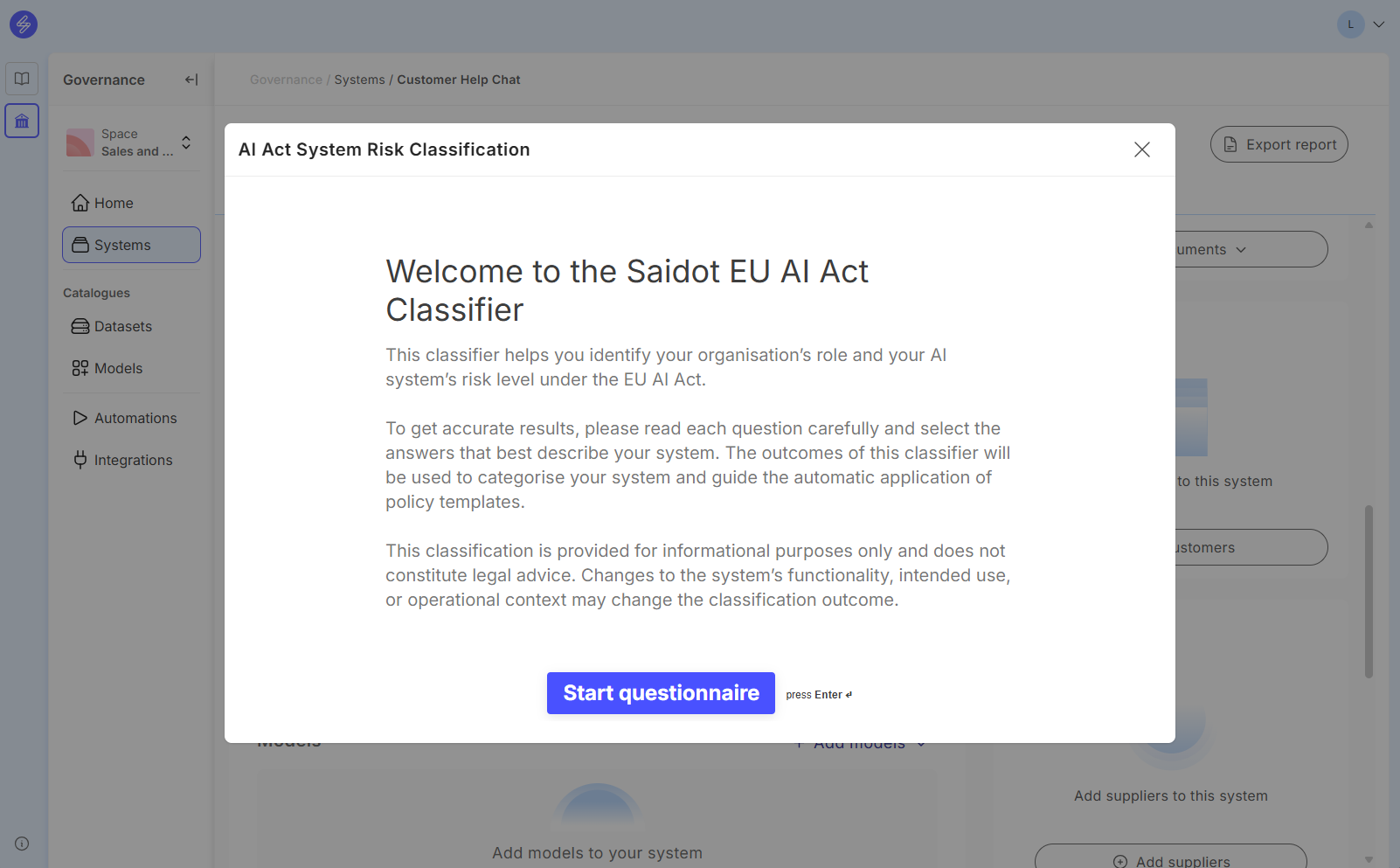

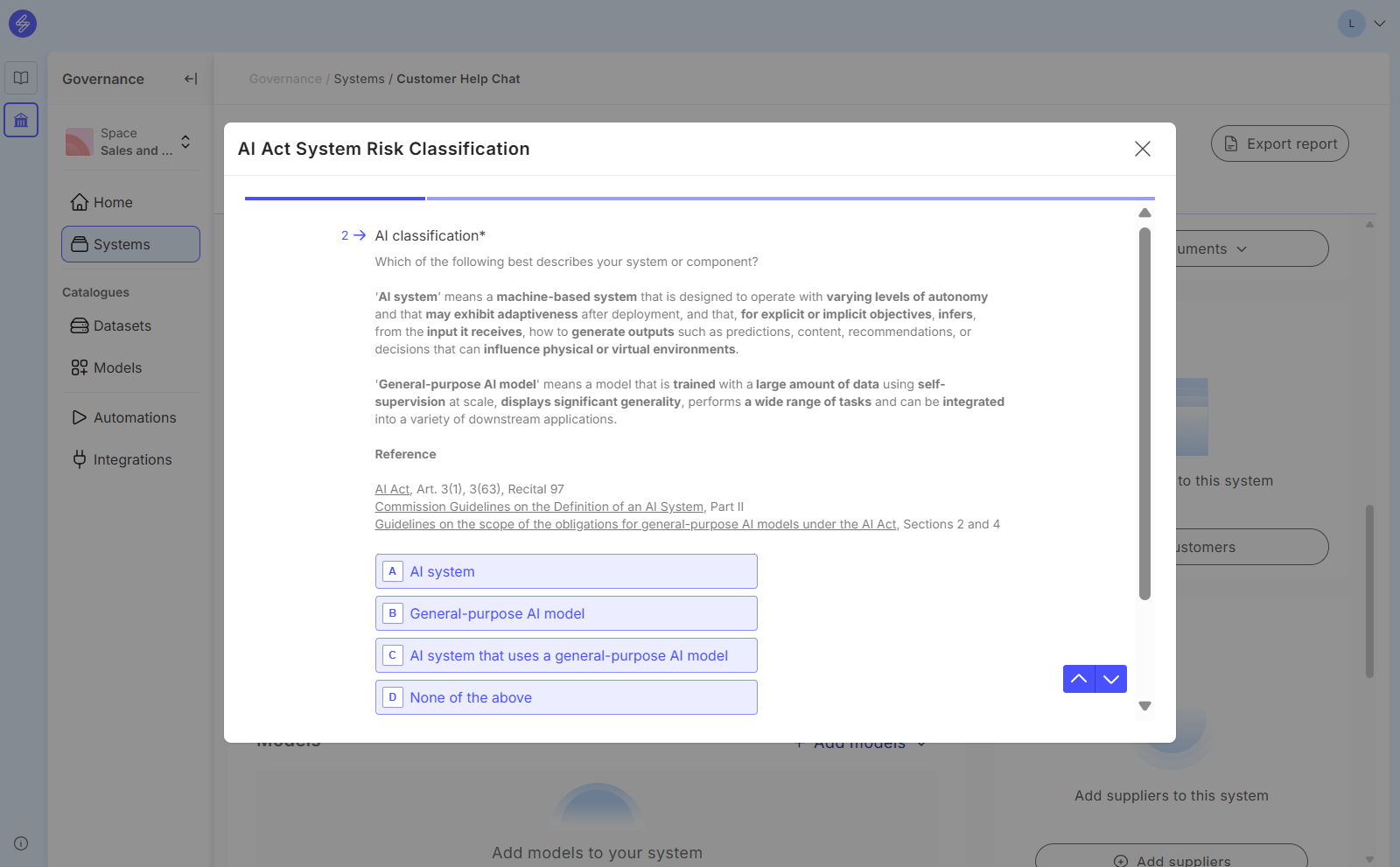

AI Act risk classification

AI systems can also be classified according to the AI Act. This classification workflow is enabled in Automations.

The workflow starts when you click Start classification.

The AI Act classifier asks several questions and clarifies the terminology and options for each.

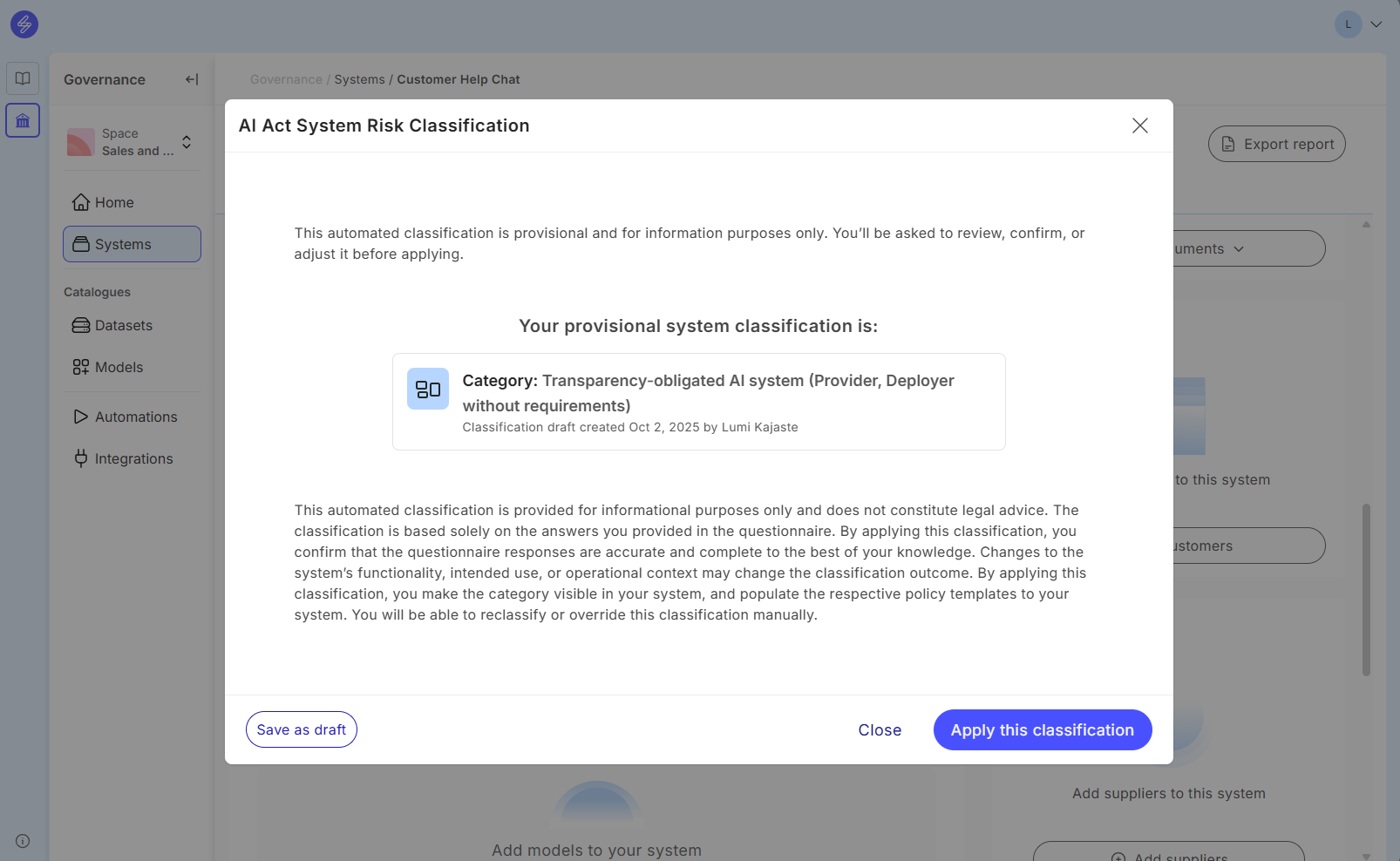

After answering all the questions, you will receive a provisional classification. This classification can now be saved as a draft and reviewed before it is applied.

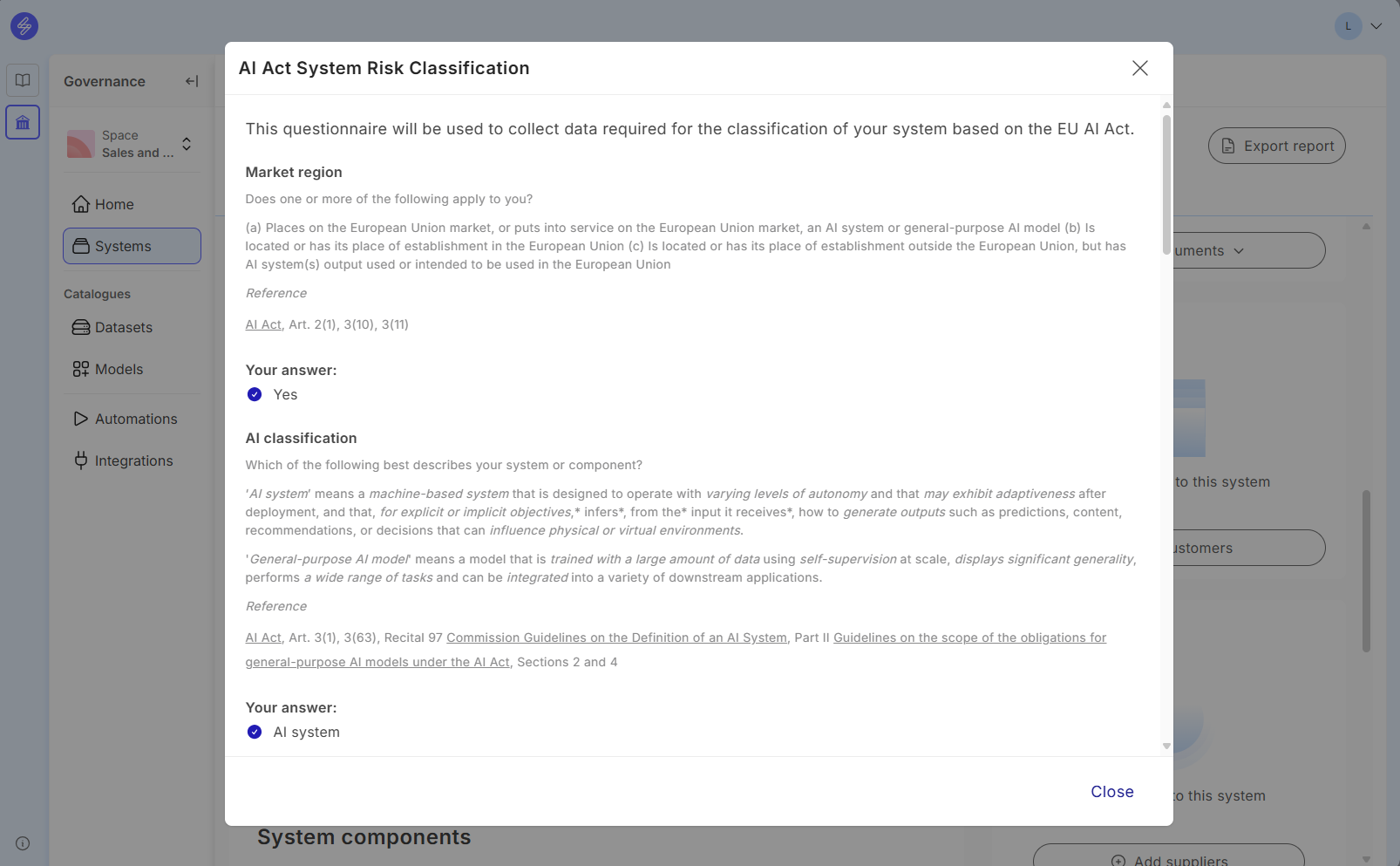

After saving the AI Act Classification as a draft, the given answers can be reviewed as a justification.

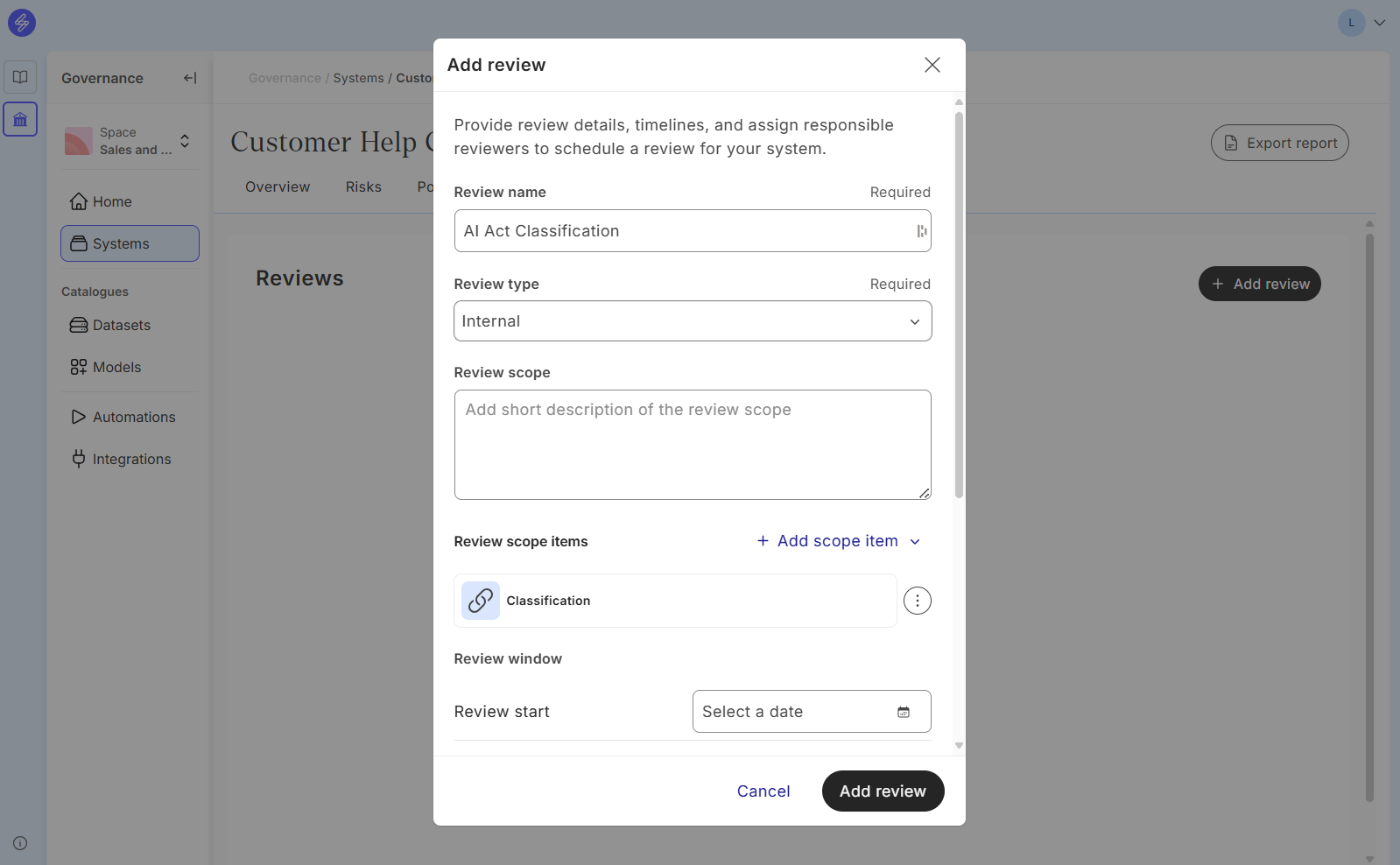

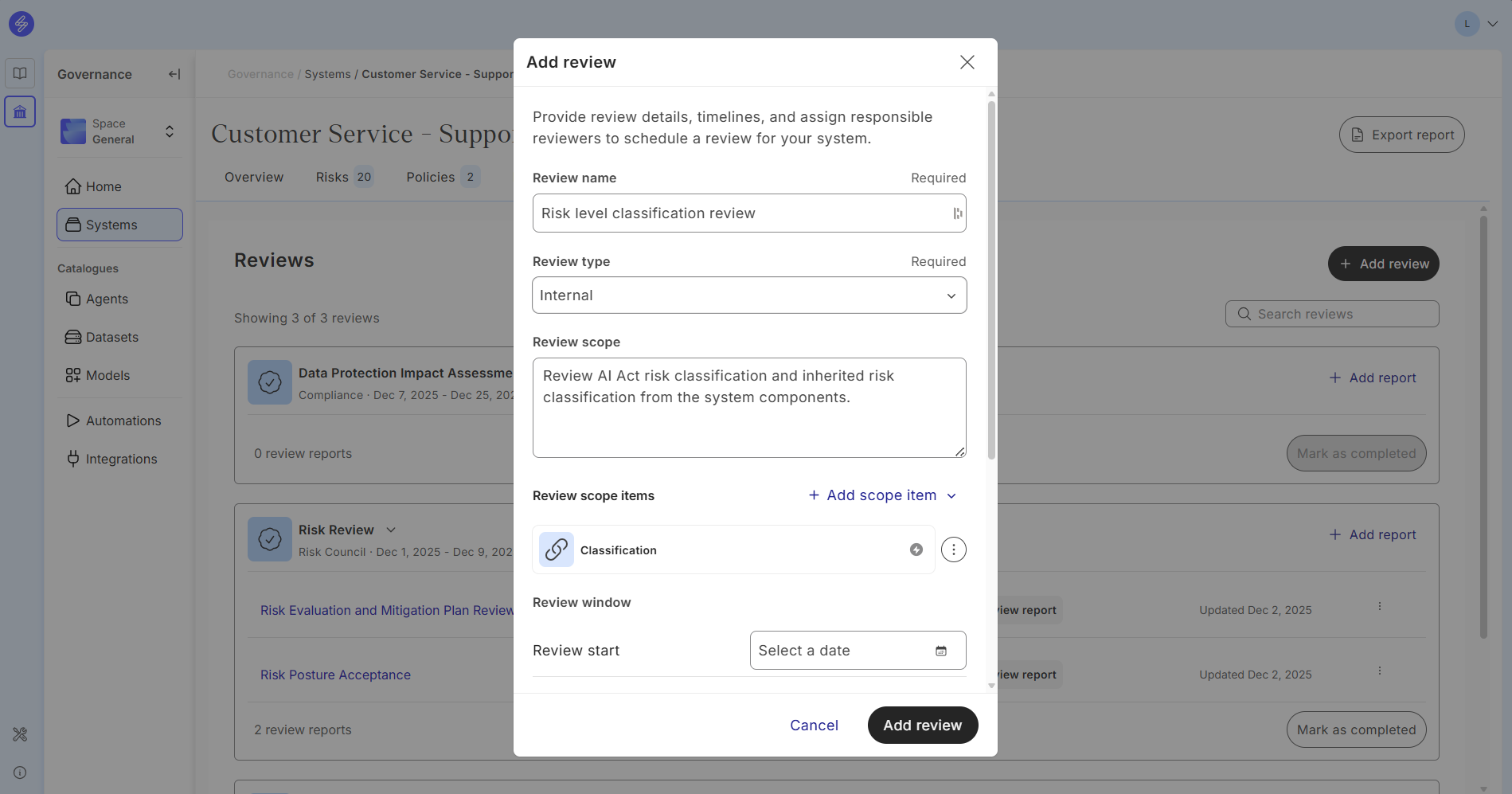

If your organisation's AI governance process requires it, a review task can be created and assigned to your legal and compliance team by linking the classification to the review scope item.

When you are ready to apply the classification, go back to the Overview page and press “Apply draft classification”.

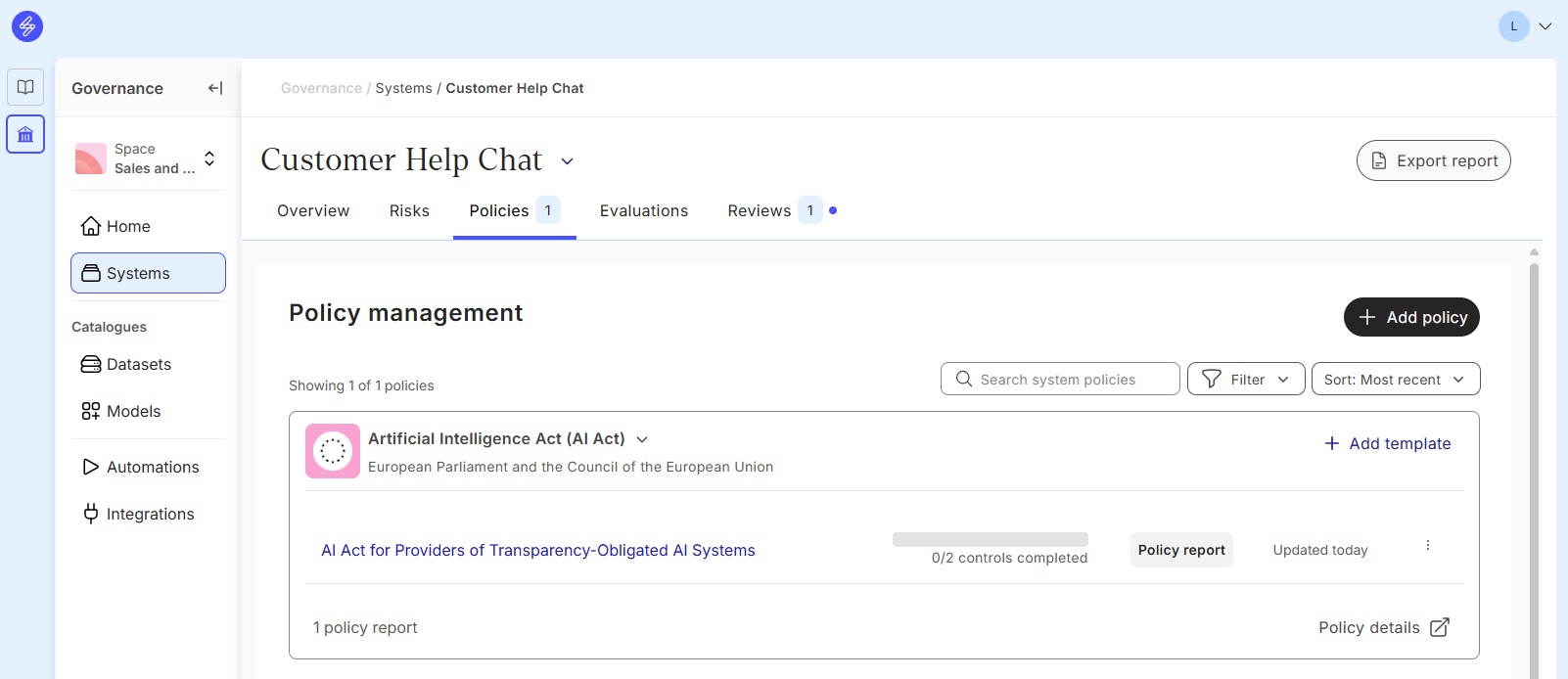

When you have applied the draft classification, the status of the classification turns to Complete and you can see a new item in the Policies tab.

The Artificial Intelligence Act (AI Act) Policy has now been automatically added to the Policy management tab with the right template. You can review the template by clicking on the template link. This view allows you to follow the progress of the controls and see when they have been completed.

The template contains the controls that are applicable based on your classification. Learn more about how to prove your compliance and use the Evidence Store to reuse evidence with AI-based recommendations.

AI system classification review and reporting

A review can be scoped to audit the AI system classification, if required by the organisation's AI governance process.

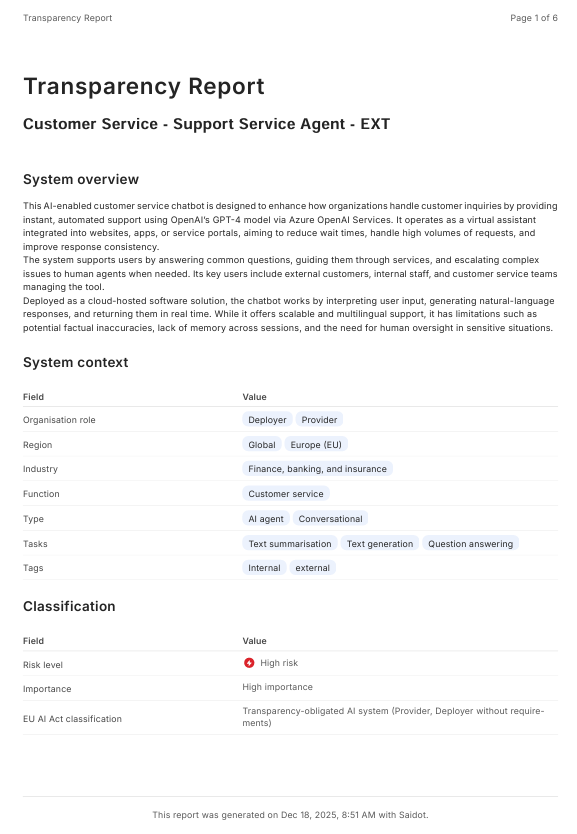

The outcome of the classification is also visible in the Transparency report.

Analyse the AI system inventory based on risk level and importance

The AI system inventory can be managed more effectively when the AI systems are classified. The AI systems can be sorted and filtered based on their classification.
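As an illustration of sorting and filtering by classification, the following Python sketch works on a small in-memory inventory. It does not use Saidot's API or data model. The `AISystem` class, the helper functions, the example systems and the Low/Medium/High importance scale are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List

# Assumed orderings; the importance scale is not defined in this article.
RISK_ORDER = {"Low": 1, "Medium": 2, "High": 3, "Prohibited": 4}
IMPORTANCE_ORDER = {"Low": 1, "Medium": 2, "High": 3}


@dataclass
class AISystem:
    name: str
    risk: str
    importance: str


def filter_by_risk(systems: List[AISystem], minimum: str) -> List[AISystem]:
    """Keep the systems whose risk level is at least `minimum`."""
    threshold = RISK_ORDER[minimum]
    return [s for s in systems if RISK_ORDER[s.risk] >= threshold]


def sort_for_review(systems: List[AISystem]) -> List[AISystem]:
    """Order by risk (highest first), then importance (highest first), then name."""
    return sorted(
        systems,
        key=lambda s: (-RISK_ORDER[s.risk], -IMPORTANCE_ORDER[s.importance], s.name),
    )


# Hypothetical inventory.
inventory = [
    AISystem("Chatbot", "Medium", "High"),
    AISystem("CV screening", "High", "Medium"),
    AISystem("Spell checker", "Low", "Low"),
    AISystem("Fraud detection", "High", "High"),
]

for system in sort_for_review(filter_by_risk(inventory, "Medium")):
    print(system.name, system.risk, system.importance)
```

Sorting on risk first and importance second puts the systems that need the most governance attention, and matter most to the business, at the top of the list.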